by Ariella Young & Anthony Wenyon

We live in an age of disruption. We see it happening so rapidly sometimes it’s hard for us to even keep up. Look away, and suddenly everything’s different.

Behind the scenes, it’s not always like this. The seeds of disruption are often planted long before. The key is spotting the signs.

From the late ’80s, the controls of a car have been transitioning from mechanical to electric. Yes, we heard about this … how it makes cars cheaper and more reliable. It happened with brakes and your accelerator pedal too. But what was hiding here is that once the controls are electric, computers can become involved in driving.

Remember your old mobile that could only send texts and make calls? Text was great, but it didn’t really change your life, did it? But it was the first little sign of what happened back in the ’90s: mobile phones went from analogue to digital signal processing. This change was hiding; we didn’t really see the effects of it until phones became ‘smart’ with internet, cameras and apps. This disruption bit happened very quickly, and now our phones have genuinely made our lives different.

You might think computers driving is happening slowly, but we are now just about at the disruption bit. The seeds have long been planted and it’s all about to happen before your eyes. DON’T look away now. You will soon be ridesharing in an autonomous car.

Computers driving

So, now the controls of your car are electric …

For computers to make decisions about steering, accelerating and braking a car, they will, however, need a guide to tell them what to do and when.

Like a decision tree — “in this situation, you need to do this, and so on” … “if there is a hazard in front of you, but not in the lane next to you… slow down, then change lane… ”

The difficult bit for driverless cars is not actually the guide; it’s determining what situation a car is in.
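To make the 'guide' idea concrete, here is a minimal sketch of the lane-change rule quoted above, written in Python. The `Situation` fields and the action names are our own illustrative assumptions, not anything a real car maker uses. Notice that the rules are the easy part: everything hinges on someone handing `decide` a correct `Situation`.

```python
from dataclasses import dataclass


@dataclass
class Situation:
    """A hypothetical, already-interpreted view of the road."""
    hazard_ahead: bool
    hazard_in_next_lane: bool


def decide(situation: Situation) -> list[str]:
    """Walk a tiny decision tree and return the actions to take, in order."""
    if not situation.hazard_ahead:
        return ["continue"]
    if not situation.hazard_in_next_lane:
        # Hazard in front, next lane clear: slow down, then change lane.
        return ["slow_down", "change_lane"]
    # Hazard in front and nowhere to go: slow down and stop.
    return ["slow_down", "stop"]


print(decide(Situation(hazard_ahead=True, hazard_in_next_lane=False)))
```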

Reading the environment

For this, a car needs sensors to read its environment… Where is the road going? Where are the other cars? What do traffic signals say, and what’s adjacent to the road (on the pavement, or at joining roads)?

Although sensor technology is very complex, it is progressing very rapidly — whether cameras that detect objects based on their outline and colour, or radar and ‘radar like’ systems that map the forms and shapes surrounding a car.

As such, sensors are actually a (relatively) easy part of the driverless car challenge…

Interpreting the environment

The difficult bit is for a computer to interpret the data from its sensors to determine what situation the car is in… For example: is the object on the pavement a pedestrian? Is it likely to walk into the road? When might it be in the road? Which part of the road will it be in?

If, for example, a computer can determine from sensor data that a pedestrian is likely to be in the road, say 30m ahead, it then has a defined Hazard Situation. From this point, it’s straightforward for the decision tree guide to tell the car to slow down or stop altogether.
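As a rough illustration of why the last step is 'straightforward', here is a sketch that turns a defined hazard (a pedestrian 30m ahead) into a slow-down-or-stop decision using basic stopping-distance arithmetic. The deceleration, latency and safety margin figures are assumptions chosen for the example, not real vehicle parameters.

```python
def stopping_distance_m(speed_mps: float,
                        decel_mps2: float = 6.0,   # assumed braking deceleration
                        latency_s: float = 0.2) -> float:  # assumed system delay
    """Distance covered during the delay, plus braking distance v^2 / (2a)."""
    return speed_mps * latency_s + speed_mps ** 2 / (2 * decel_mps2)


def respond_to_hazard(speed_mps: float,
                      hazard_distance_m: float,
                      margin_m: float = 5.0) -> str:  # assumed safety margin
    """Stop if there is only just enough room to do so; otherwise slow down."""
    needed = stopping_distance_m(speed_mps) + margin_m
    return "stop" if needed >= hazard_distance_m else "slow_down"


# Pedestrian expected in the road 30 m ahead, at two different speeds.
print(respond_to_hazard(speed_mps=10.0, hazard_distance_m=30.0))
print(respond_to_hazard(speed_mps=20.0, hazard_distance_m=30.0))
```

At 10 m/s the car has room to spare and only needs to slow down; at 20 m/s it needs more than 30m to stop, so the rule says to brake to a stop straight away.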

The real technology race — machine learning

Companies like Google (their car division, Waymo), Uber and Tesla are racing to solve this last (very difficult) interpretation problem. Their general approach is to gather huge amounts of data in the hope that they can use it to teach computers to recognize the millions and millions of different situations that a car may be in. For example, every Tesla on the road is sending real-time data to Tesla HQ from its cameras and other sensors.

With such data, they can begin to teach computers. For example, you can tell a computer that a certain shape in an image is a pedestrian. You can do this many times until it is able to recognize a pedestrian by itself. Then (the interesting bit) computers can ‘watch’ millions of miles of sensor data, picking out the pedestrians and monitoring what they tend to do, in particular picking out the contexts in which they typically end up in the road. This process is a type of Machine Learning.
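The 'tell it many times until it can do it by itself' idea can be sketched with a toy classifier. This one is a simple nearest-centroid method on two made-up features (the height and width of an object's outline, in metres). It is only an illustration of learning from labelled examples; real systems use far richer data and models, and none of the numbers below come from a real dataset.

```python
from collections import defaultdict
from math import dist


def train(examples):
    """Average the features of each label to get one 'typical' point per label."""
    sums = defaultdict(lambda: [0.0, 0.0])
    counts = defaultdict(int)
    for (height, width), label in examples:
        sums[label][0] += height
        sums[label][1] += width
        counts[label] += 1
    return {label: (s[0] / counts[label], s[1] / counts[label])
            for label, s in sums.items()}


def classify(centroids, features):
    """Pick the label whose typical point is closest to the new object."""
    return min(centroids, key=lambda label: dist(centroids[label], features))


# Hypothetical labelled examples: ((height_m, width_m), label).
labelled = [
    ((1.7, 0.5), "pedestrian"), ((1.8, 0.6), "pedestrian"), ((1.6, 0.4), "pedestrian"),
    ((1.5, 4.2), "car"), ((1.4, 4.5), "car"), ((1.6, 4.0), "car"),
]
model = train(labelled)
print(classify(model, (1.75, 0.55)))  # a tall, narrow outline
print(classify(model, (1.5, 4.3)))    # a low, wide outline
```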

So, where are we now?

With no company having cracked this machine learning yet, cars can’t recognize enough ‘situations’ to be truly safe on the road. Waymo (Google) does have a fleet of cars without drivers going around a town in Arizona, but this is a pretty benign environment. It’s a small geography with open roads and very little traffic.

It is, however, just a matter of time before driverless cars are safe, and time moves very quickly just before disruption hits.

Alright, so when you hear tech guys talking about autonomous cars on the news or at some trendy juice bar, you’ll probably hear chat about different levels of autonomy.

So let’s first explain these levels … we’ll use them to define what the industry means by “a fully autonomous car”.

The engineering body SAE International defined a stage-based model, from 0 to 5, which the US National Highway Traffic Safety Administration (NHTSA) has adopted and which is now used internationally:

Level 0: Automated system issues warnings and may momentarily intervene but has no sustained vehicle control.

Level 1: (“hands on”) The driver and the automated system share control of the vehicle. Examples are systems where the driver controls steering and the automated system controls engine power to maintain a set speed (Cruise Control) or engine and brake power to maintain and vary speed (Adaptive Cruise Control or ACC). The driver must be ready to retake full control at any time.

Level 2: (“hands off”) The automated system takes full control of the vehicle (accelerating, braking, and steering). The driver must monitor the driving and be prepared to intervene immediately at any time if the automated system fails to respond properly. The shorthand “hands off” is not meant to be taken literally. In fact, contact between hand and wheel is often mandatory during Level 2 driving.

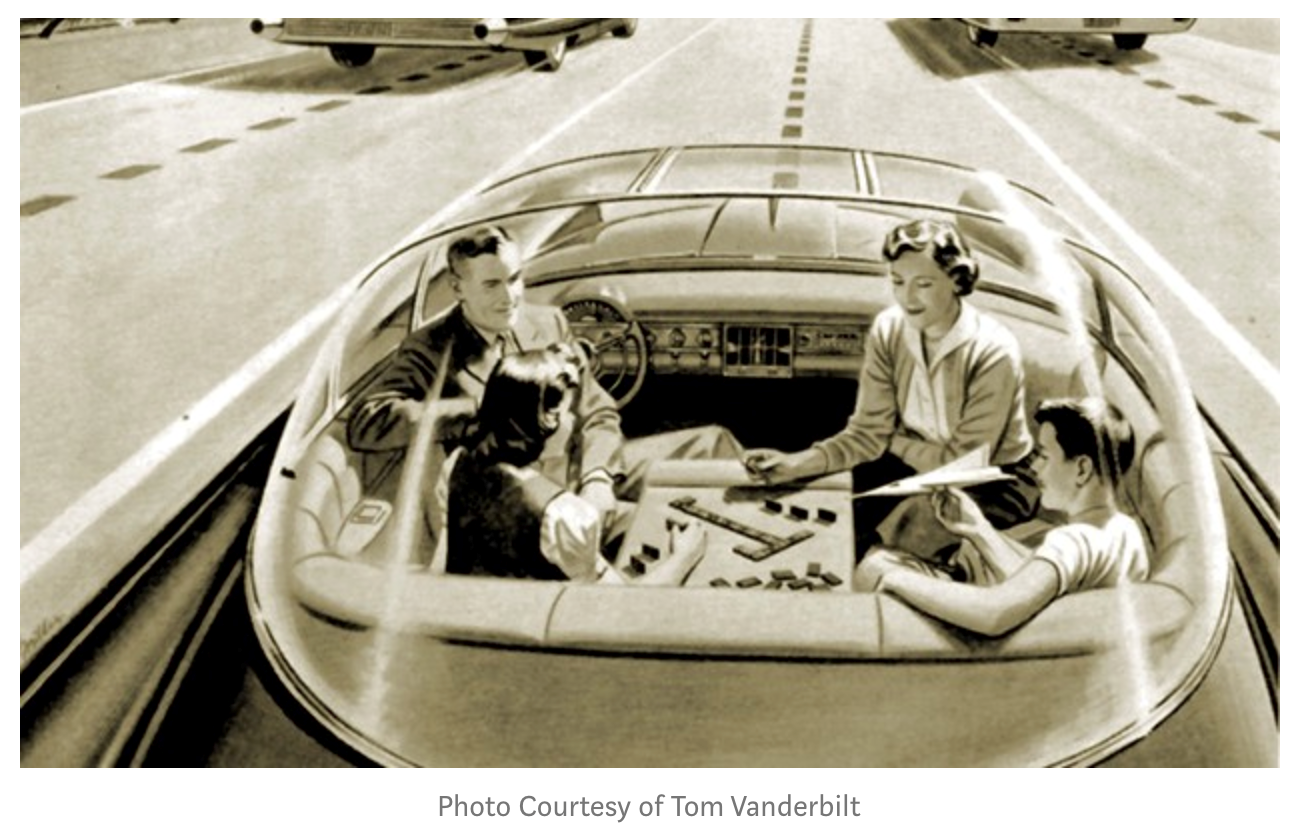

Level 3: (“eyes off”) The driver can safely turn their attention away from the driving tasks, e.g. the driver can text or watch a movie. The vehicle will handle situations that call for an immediate response, like emergency braking. The driver must still be prepared to intervene within some limited time, specified by the manufacturer, when called upon by the vehicle to do so.

There are some safety concerns here as drivers may be tempted to switch off completely. Ford, for example, is keen to jump directly to Level 4.

Level 4: (“mind off”) As Level 3, but no driver attention is ever required for safety, e.g. the driver may safely go to sleep or leave the driver’s seat. Self-driving is, however, supported only in limited spatial areas or under special circumstances. Outside of these areas or circumstances, the vehicle must be able to safely abort the trip, e.g. park the car, if the driver does not retake control. The car may be programmed not to drive in unmapped areas or during severe weather such as snow.

Level 5: Full automation in all conditions. You can sleep, read or stream Stranger Things on Netflix wherever you are.
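If it helps to see the levels side by side, here is one way to write them down as a small Python enum, with three helper questions that capture what changes as you move up. The member names and helper functions are our own shorthand for the descriptions above, not official terminology.

```python
from enum import IntEnum


class Level(IntEnum):
    """The six levels of driving automation, using the nicknames above."""
    NO_AUTOMATION = 0  # warnings and momentary intervention only
    HANDS_ON = 1       # driver and system share control
    HANDS_OFF = 2      # system drives, driver monitors constantly
    EYES_OFF = 3       # driver may look away but must respond when asked
    MIND_OFF = 4       # no driver attention needed, within limited areas
    FULL = 5           # no driver attention needed, anywhere


def driver_must_monitor(level: Level) -> bool:
    """Up to Level 2, the human has to watch the road the whole time."""
    return level <= Level.HANDS_OFF


def driver_must_be_available(level: Level) -> bool:
    """Up to Level 3, the human must be ready to take over when called upon."""
    return level <= Level.EYES_OFF


def works_everywhere(level: Level) -> bool:
    """Only Level 5 drops the 'limited areas or circumstances' restriction."""
    return level == Level.FULL


for level in Level:
    print(int(level), level.name, driver_must_monitor(level),
          driver_must_be_available(level), works_everywhere(level))
```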

Most top-end luxury cars, such as those from BMW, Mercedes and Lexus, are now at the early stages of Level 2 automation. A computer can steer them within clearly marked lanes whilst handling stop-go in traffic or maintaining a set distance from the car ahead on a highway.

Tesla is at the top end of Level 2. Waymo has got to Level 4, but only in their small Arizona test area. Progress on Machine Learning will unlock more of Level 4, and, ultimately, Level 5, but there are a few other obstacles we’ll hit beforehand…

What other factors limit the level of automation we can get now?

Roads & other infrastructure: Road markings are a key source of information about a car’s environment, but if they are faded, poorly executed or obscured, sensors will not pick up sufficient data for computers to interpret where the road is. We need more machine learning progress before unmarked roads can be interpreted consistently.

Roads could, however, be actively sending data to self-driving cars to avoid the need for ‘interpretation’, but few roads are ‘active’ at this time. It would also be unwise to rely on roads to transmit such vital information…just think of your temperamental Wi-Fi!

The same goes for cars transmitting location data to each other. Great if you can get it, but it can’t be relied on. It will, in any case, take a while for enough cars to be ‘transmitting’.

In general, however, the better the road markings and the greater the availability of helpful ‘guiding’ data, the more Level 2 driving we will be able to do. They won’t get us to higher levels though.

Weather conditions: This is where the current limitations of sensors come in. They are great when there is sunshine, but tech progress is still needed for when camera images are distorted by snow, heavy rain or fog. Even radar is affected by atmospheric factors, as is LiDAR (like radar, but using light pulses instead of radio wave pulses). Combining data from multiple types of sensors has, however, been helpful here. For example, whilst LiDAR produces very detailed spatial data in good weather, sonar sensors can take over when fog blocks a LiDAR light pulse from reaching objects around a car. Things are far from perfect though. Waymo only operates its vehicles in light rain for this reason. Any more and the fleet is grounded. Still, solving the limitations of sensors alone won’t get us past Level 2.
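One simple way to picture 'combining data from multiple types of sensors' is a confidence-weighted average: each sensor reports a distance and how much it trusts that reading, and a sensor blinded by fog simply gets a confidence of zero. This is a deliberately simplified sketch; the `Reading` type, the sensor names and the confidence values are assumptions for illustration, and real fusion systems are far more sophisticated.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Reading:
    sensor: str        # hypothetical sensor name, e.g. "lidar" or "sonar"
    distance_m: float  # estimated distance to the object ahead
    confidence: float  # 0.0 (blinded) to 1.0 (fully trusted)


def fuse_distance(readings: list[Reading]) -> Optional[float]:
    """Confidence-weighted average distance, or None if no sensor can see."""
    total_weight = sum(r.confidence for r in readings)
    if total_weight == 0:
        return None
    return sum(r.distance_m * r.confidence for r in readings) / total_weight


clear_day = [Reading("lidar", 20.0, 0.5), Reading("sonar", 22.0, 0.5)]
thick_fog = [Reading("lidar", 20.0, 0.0), Reading("sonar", 22.0, 1.0)]

print(fuse_distance(clear_day))  # both sensors contribute equally
print(fuse_distance(thick_fog))  # only the sonar reading counts
```

The `None` case matters too: if every sensor is blinded, the honest answer is "we don't know", which is roughly why a fleet gets grounded in heavy rain.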

Regulation & Legal: Only a few cities in the US allow cars without drivers. For cars to be used at level 3 or above, state and federal level approvals are needed. These will come as the technology improves (mainly the machine learning piece but also sensors), but will inevitably be subject to politics, lobbying and special interest groups.

Having an adequate product liability legal framework will also be important to such approvals, as well as for obtaining insurance on driverless vehicles.

Apportioning liability in accident situations could, however, be very difficult. Without a driver, the vehicle manufacturer/assembler or sensor supplier could be liable. What’s more, the software writer who pre-determines the basis for a car’s ‘decision making’ could be facing claims. Legal frameworks and precedent will be important here.

Until the necessary regulatory and legal enablers are in place, a human will likely be required to keep their eyes on the road and hands at least near the wheel (this is really only level 2 stuff…).

If technology solves the limitations of sensors, and machine learning allows cars to consistently make the right decisions in effectively all situations, then the regulatory and legal approvals will be the factor that gets us to Level 4 & 5 cars.

So, you may be thinking…what’s Level 3 for? …it’s controversial (“eyes off, mind on”) …and only likely to get approved (for the general public) at the same time as Level 4? Well, skipping it would be like trying to get a PhD without having ever attended university. It’s the stage where all the real testing will probably take place…where we actually see where machine learning has got us. Indeed, the government may not ‘award the PhD’ until they have seen lots (millions and millions of miles!) of controlled, safe Level 3 driving all over the country by brave Waymo, Tesla and Uber employees.

Most people in the industry believe Level 4 is where we will see the real disruption. This will be disruption like we’ve never seen before. Forget smartphone life changes…this will be another Level ;)